A Review of Gesture Schemes

To begin analyzing gesture commands, I need to pick a gesture scheme. This week, I have been looking at commercial and experimental gesture control devices and the commands they support. I found some big names, like John Underkoffler, as well as smaller thesis projects. Below is a list of the work I found, its commands, and my thoughts on each.

##Time Breakdown

- Research on gesture schemes: 7 hrs 25 mins

- Research on designing Myo gestures: 1 hr 45 mins

- Summarizing & reviewing research: 2 hrs 30 mins

- Total: 11 hrs 40 mins

J. Underkoffler and K. Parent, “System and Method for Gesture Based Control System,” United States Patent, 2014.

http://www.freepatentsonline.com/20150205364.pdf

The most interesting scheme I found was by John Underkoffler, a gesture control legend. Underkoffler started at MIT’s Media Lab, where he was picked up by Spielberg to design the gestures in Minority Report. He is currently working on gesture-controlled commercial products, like the Mezzanine (http://www.oblong.com/mezzanine/benefits/), and more Hollywood movies.

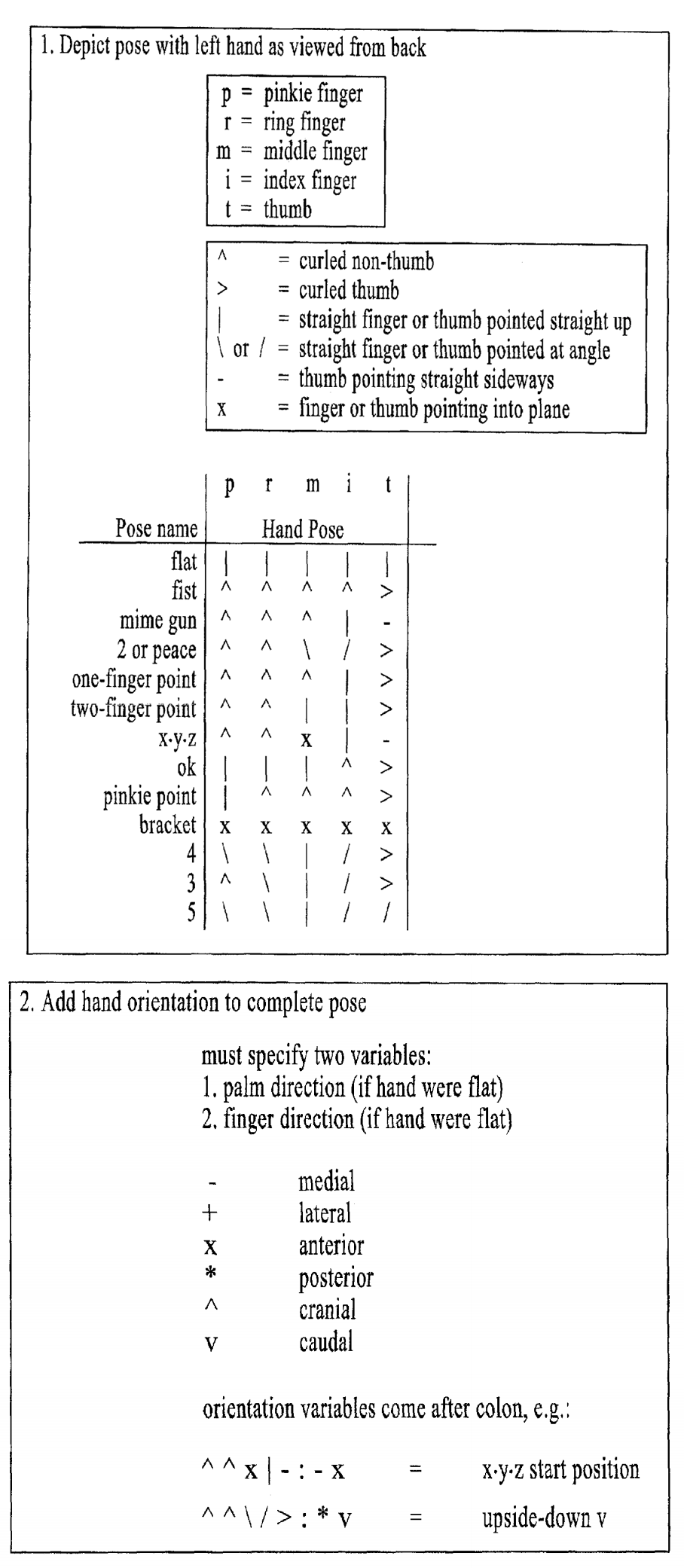

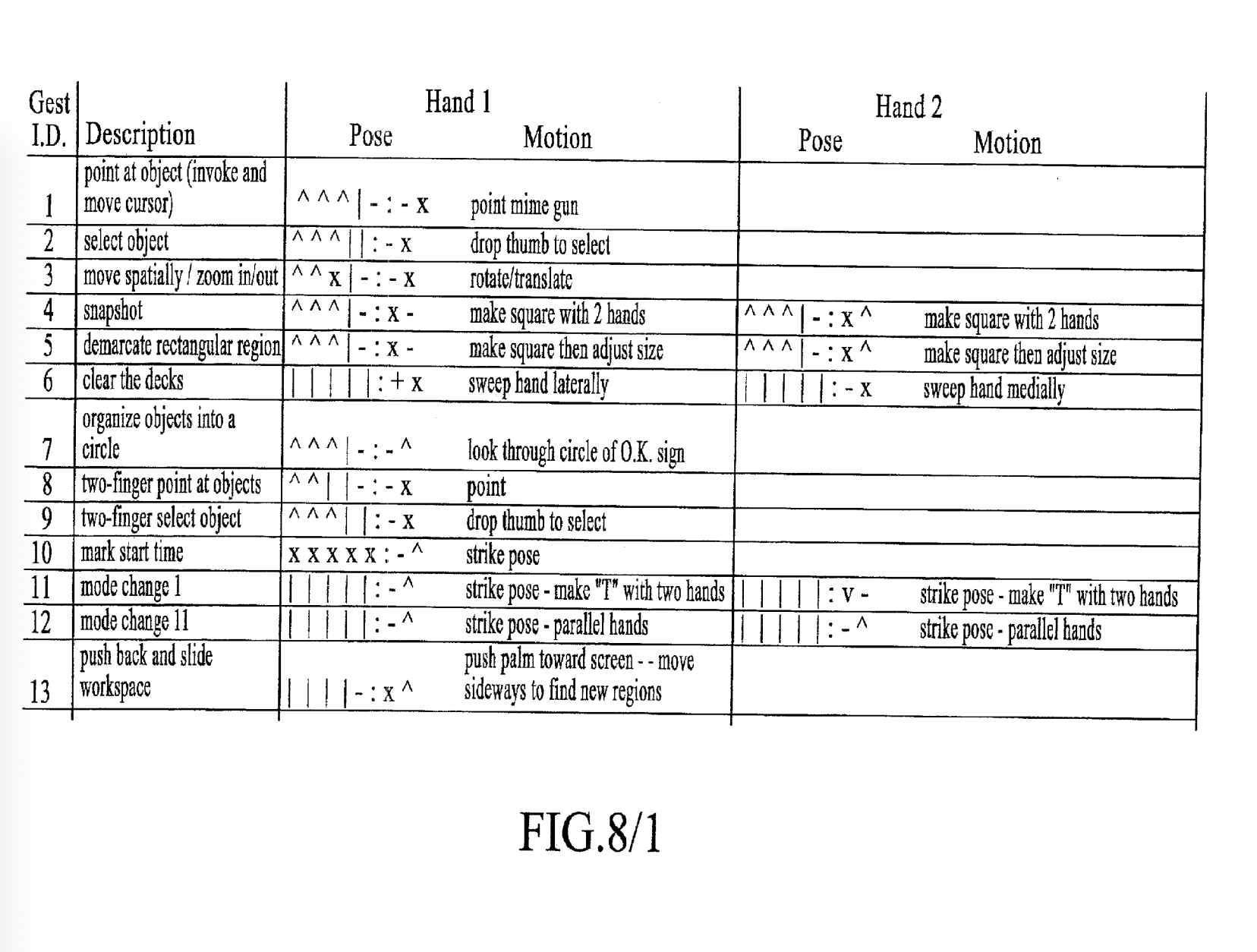

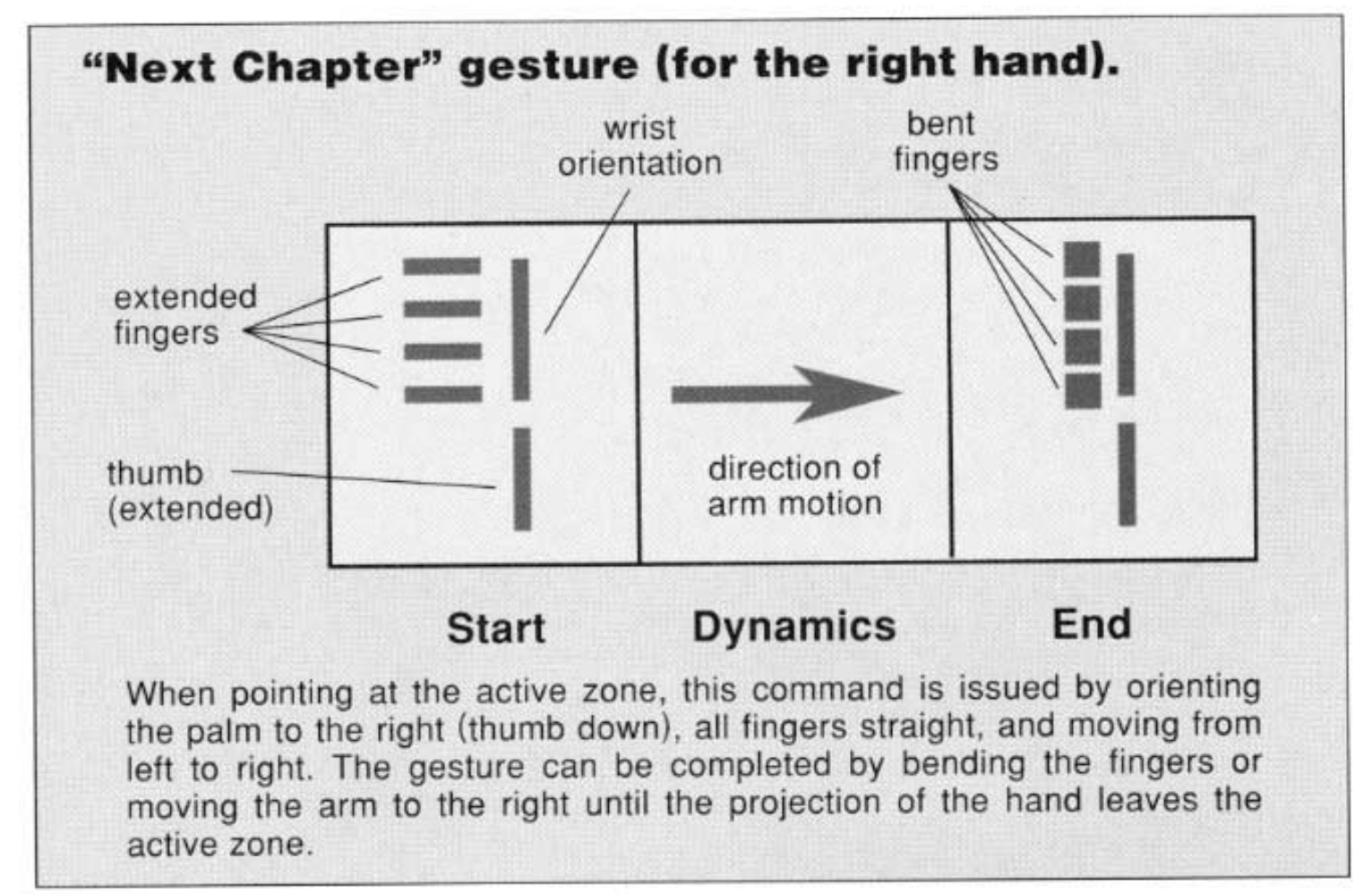

The scheme I found was listed in a patent filed last September (2014) and only published this July. In this patent, Underkoffler and Kevin Parent present a two-handed gesture scheme with a lot of fine control using the fingers. The scheme is “encoded” and needs a key to be read. I have included an image of this key if anyone is interested in using it.

Most of the proposed gestures need only one arm/hand. The commands seem to focus on manipulating media and organizing a digital workspace. This “gestural vocabulary” is what the patent is protecting.

My biggest concern with this gesture scheme is that it requires two hands and I can only develop for one. Another is that I do not see any command that addresses the “live mic” issue with gesture control: unlike touch control, gesture control is always on and always tracking, so it can misread your ordinary movements as a command. Overall though, this is Underkoffler’s work and he’s been doing this longer than any of us.

Chudgar and S. Mukerjee, “S Control: Accelerometer-based Gesture Recognition for Media Control,” presented at the International Conference on Advances in Electronics, Computers and Communications, 2014.

http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7002459

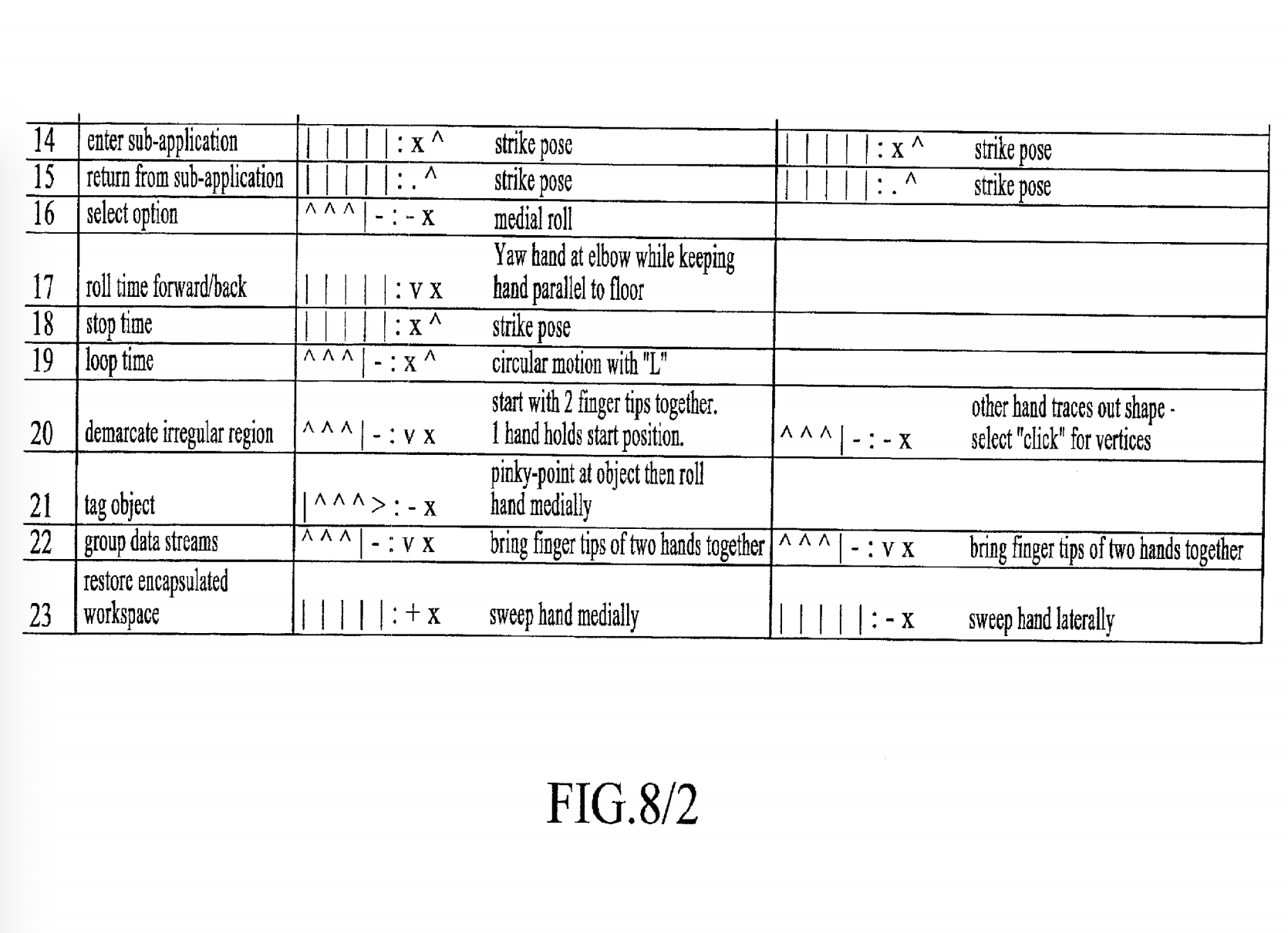

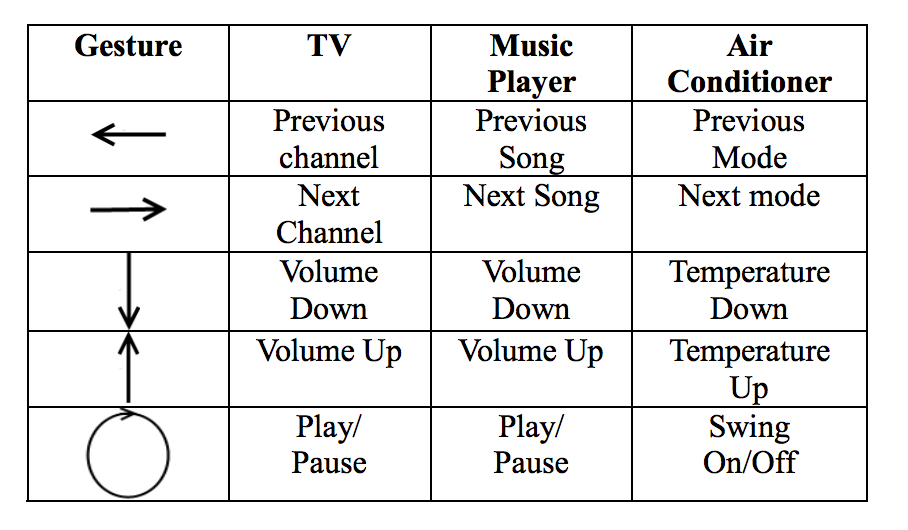

In this conference paper, Chudgar and Mukerjee built a gesture control scheme for the Samsung Galaxy Gear, a line of smartwatches. They developed a system based on the device’s accelerometer. Instead of binding each gesture to a single command, as in Underkoffler’s patent, the scheme lets the same gesture map to different commands depending on which application is open.
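A rough sketch of what application-dependent mapping could look like is below. The application names, command names, and the `resolve_command` helper are all made up for illustration; they are not taken from the paper.

```python
# Hypothetical per-application gesture maps. The app and command names are
# illustrative assumptions, not the mappings used by Chudgar and Mukerjee.
GESTURE_MAPS = {
    "music_player": {
        "up": "volume_up",
        "down": "volume_down",
        "left": "previous_track",
        "right": "next_track",
    },
    "photo_gallery": {
        "left": "previous_photo",
        "right": "next_photo",
    },
}


def resolve_command(active_app, gesture):
    """Return the command bound to `gesture` in `active_app`, or None if unbound."""
    return GESTURE_MAPS.get(active_app, {}).get(gesture)


# The same "right" gesture resolves differently depending on the open app.
print(resolve_command("music_player", "right"))
print(resolve_command("photo_gallery", "right"))
```

The point of the lookup-by-application design is that the gesture vocabulary stays small while the number of commands it can reach grows with each application.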

The gestures are mostly directional: up, down, left, and right. The last two are drawing a clockwise circle and drawing an “S” shape. The controls were actually based on gestures proposed by Nokia.

For Underkoffler’s scheme, my main complaint was that nothing addressed the “live mic” issue. This team does address it. The “S” gesture is not mapped to a command, as you can see in the chart above. Instead, it determines whether or not the device listens for commands. The user performs the “S” gesture and the device starts listening for commands. But there is one issue with this scheme. The team says, “while performing the ‘Down’ gesture, the hand first moves upwards and then downwards. The upward movement can in some cases be erroneously detected as ‘Up’.” This is something I have to remember in the future. The same thing applies to the pinch-to-zoom technique. To pinch in, you first have to open your hand, which looks like a pinch-out command at first.
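To make the activation idea concrete, here is a minimal sketch of a gate that drops every gesture until the “S” gesture has been seen. The `GestureGate` class is hypothetical, and it assumes the same gesture also switches listening back off, which the paper may or may not do.

```python
class GestureGate:
    """Drops gestures unless the activation gesture has switched listening on."""

    def __init__(self, activation_gesture="s"):
        self.activation_gesture = activation_gesture
        self.listening = False

    def feed(self, gesture):
        """Return the gesture if it should be treated as a command, else None."""
        if gesture == self.activation_gesture:
            # Assumption: the activation gesture toggles listening on and off.
            self.listening = not self.listening
            return None
        return gesture if self.listening else None


gate = GestureGate()
stream = ["up", "s", "left", "right", "s", "down"]
accepted = [g for g in stream if gate.feed(g) is not None]
print(accepted)  # ['left', 'right']
```

The first “up” and the final “down” are ignored because the gate is closed at those points, which is exactly the protection against accidental movements that the “live mic” issue calls for.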

T. Baudel and M. Beaudouin-Lafon, “Charade: remote control of objects using free-hand gestures,” Commun. ACM, vol. 36, pp. 28-35, 1993.

http://delivery.acm.org/10.1145/160000/159562/p28-baudel.pdf?ip=144.118.47.237&id….83c

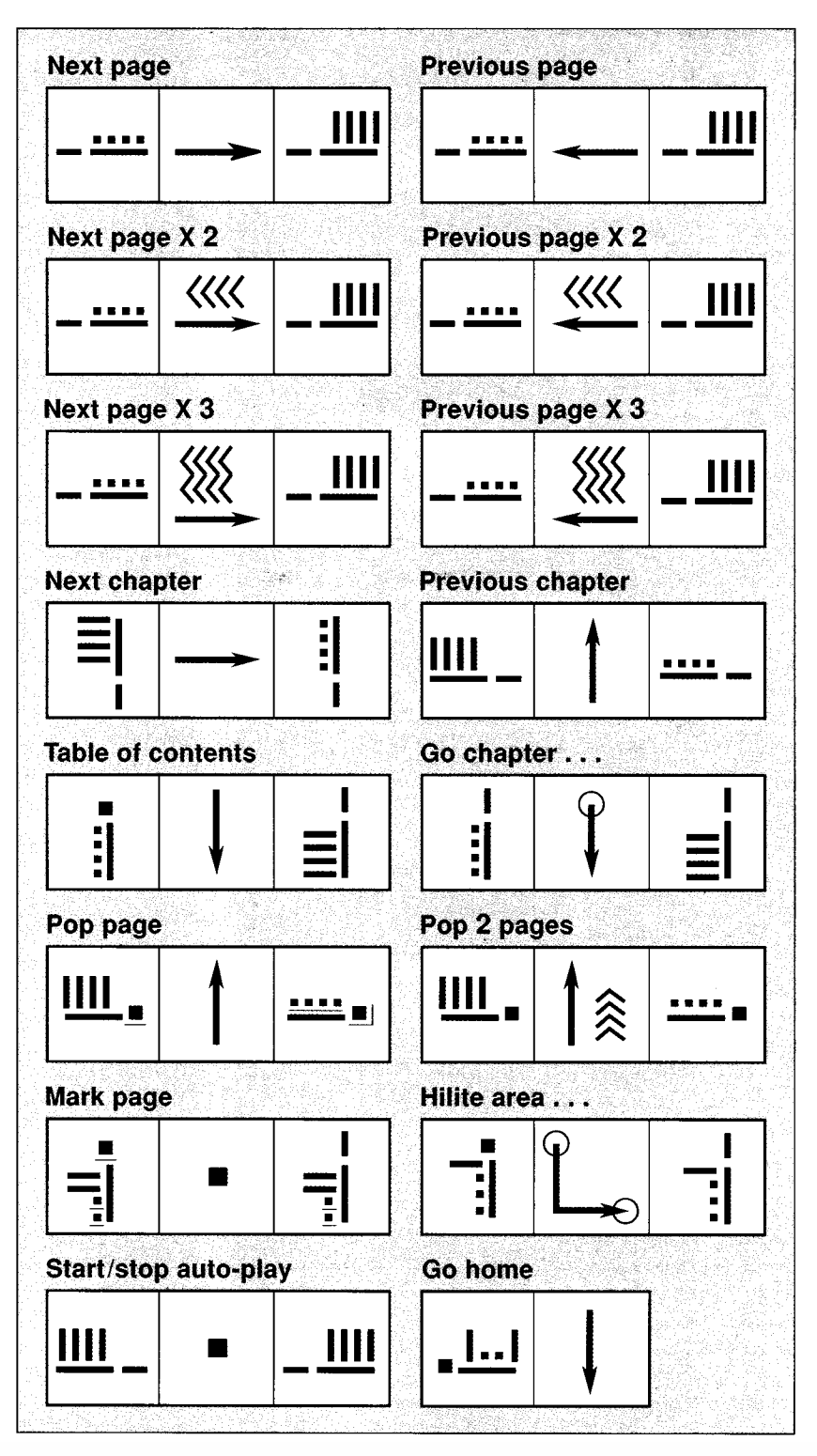

The Charade project is from 1993 and started to build the foundation for gesture control. It uses a large physical glove to control computer-aided presentations. This scheme uses a key as well. The commands all revolve around presentations: next page, previous page, and so on. The system uses only one hand/arm, which makes it easier for me to see its application to the Myo. There are also a lot of similarities between these devices. Controlling presentations is the Myo’s main selling point right now. It is what the device is good at. The actual commands do not line up though, just the purpose.

The “>>>” symbol indicates how many times to open your hand during the gesture. For example, opening once while doing the “Next page” command will move one page. Twice will move two pages.
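As a small sketch of that rule, the number of hand openings can be treated as a multiplier on the page direction. The `page_offset` function and the command names are hypothetical, and applying the same count to “previous page” is my assumption rather than something stated above.

```python
def page_offset(command, hand_opens):
    """Return how many pages to move: positive is forward, negative is back."""
    # Assumption: "previous_page" repeats the same way "next_page" does.
    direction = {"next_page": 1, "previous_page": -1}[command]
    return direction * hand_opens


print(page_offset("next_page", 1))  # 1
print(page_offset("next_page", 2))  # 2
```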

Overall, this scheme is dated but very impressive for 1993. The scheme does not address the “live mic” issue, and the usability report even notes this as a problem. It is interesting how Charade used the dynamic section of a gesture (between the start and end pose of a command) to change the meaning of the gesture. They did report that users had some trouble with this though.

M. Alcoverro, X. Suau, J. R. Morros, A. López-Méndez, A. Gil, J. Ruiz-Hidalgo, et al., “Gesture Control Interface for Immersive Panoramic Displays,” Multimedia Tools and Applications, vol. 73, p. 26, November 2014.

http://link.springer.com/article/10.1007/s11042-013-1605-7/fulltext.html

This system uses the Kinect to detect gestures (including hand gestures), identify faces, and locate the user’s head. It only controls panoramas, both still and video. A transparent menu is laid over the media. Users can play/pause the media, change the volume, navigate within the media, select options on the menu, change between the available regions of interest (ROIs), and pass control to another user. There are no visuals of all the commands for me to paste here. But the controls seem pretty complex to me, since they rely on the position of the user’s hand, fingers, and head.

Here is a written description of the commands though:

- Volume Up: One finger to mouth

- Volume Down: Hand on ear

- Mute: Gesture cross in front of mouth

- Play/Resume: Hands together like clapping

- Panning: Grab and pull media around with hand

- Zooming: Bring hands together or further apart

- ROI Mode: Controlled by moving hands left or right

- New User: Face identification adds users

This is a two-handed system relying on a full-body image (achieved through the Kinect). It tracks a lot more than I can with the Myo, but it is still an interesting scheme. Again, this is a system made for controlling media. Media consumption takes up a large share of our time, so this would be a great reference if I decided to make an interface that revolves around media consumption.

##Other interesting readings done this week

http://www.cc.gatech.edu/~thad/p/031_30_Gesture/iswc04-freedigiter.pdf

An ear-mounted finger gesture system for controlling phones (like a Bluetooth headset). It was motivated by the fact that phones were getting smaller and smaller.

http://www.cogsci.ucsd.edu/~bkbergen/cogs200/McNeill_CH3_PS.pdf

Analog study on gestures (not gesture commands). Great classification of natural gestures.

http://www.cse.iitb.ac.in/~aamod/glive/itsp.html

A cool open-source PC/webcam-based gesture recognition project.