Improving UX of Gesture Control with Touch

(Case Study)

I designed and developed an approach for improving gesture control by combining it with touch input to address its key shortcomings: speed, accuracy, and live mic syndrome. My touch-enhanced gesture control prototype replaces crucial gestures with touchscreen commands and includes a virtual clutch to lessen live mic syndrome. To test our design, I implemented the new control scheme on a generic smartphone and compared its performance to the Myo, a wearable gesture control device. Results of a user study (n=30) show that the touch-enhanced gesture control scheme is faster and more accurate when executing selection commands. Our prototype’s virtual clutch was also easier for participants to use and yielded a lower percent error.

Virtual Clutch - A command that stops and starts a gesture device’s listening. For example, the Myo armband has its users double-tap their thumb and middle finger to toggle whether the device tracks movements or not.

Skills Used

- Lean UX

- Usability Study

- Interviews

- Quantitative & Qualitative Data Analysis

- Myo Armband

- HTML5

- CSS3

- JS

- Node.js

- WebSockets

- LocalStorage API

The Challenge

An increasing number of consumer products, such as Myo and Leap Motion, have adopted gesture as the primary control scheme. However, even with its increase in popularity, gesture control is hindered by major drawbacks. Limits in software and hardware sophistication affect the speed and accuracy of command recognition. Gesture control is also plagued by live mic syndrome (LMS). LMS arises because gesture control devices constantly monitor user motion and cannot fully differentiate movements intended as commands from those that are not. LMS causes false-positive errors, such as executing a command when the user intended none.

LMS is present in most gesture control devices on the market. This issue impacts a gesture system’s ability to properly read the user’s intent and further decreases its execution accuracy and speed. The most developed approach to address LMS is a virtual clutch. A virtual clutch is a reserved command that toggles whether or not a user’s movements should be interpreted as commands. This is similar to voice control’s “wake word”. Saying “Alexa” to an Amazon Echo triggers the device to begin listening.

Myo’s Virtual Clutch

To increase the performance of gesture control and to mitigate LMS, I designed an approach that combines gesture and touchscreen control: touch-enhanced gesture (TEG). Our TEG prototype focuses on common functions, like selecting and moving within a system. We chose to combine gesture with touchscreen because touchscreens are accessible and do not suffer as severely from LMS.

Designing Touch-Enhanced Gesture

In order to address the shortcomings of gesture control, I propose replacing key gesture commands with touchscreen interaction, as well as using the touchscreen as a virtual clutch that communicates to the system when the user intends her gestures to be read as commands. I developed the TEG prototype on a generic unmodified smartphone in order to reduce the barrier for adoption.

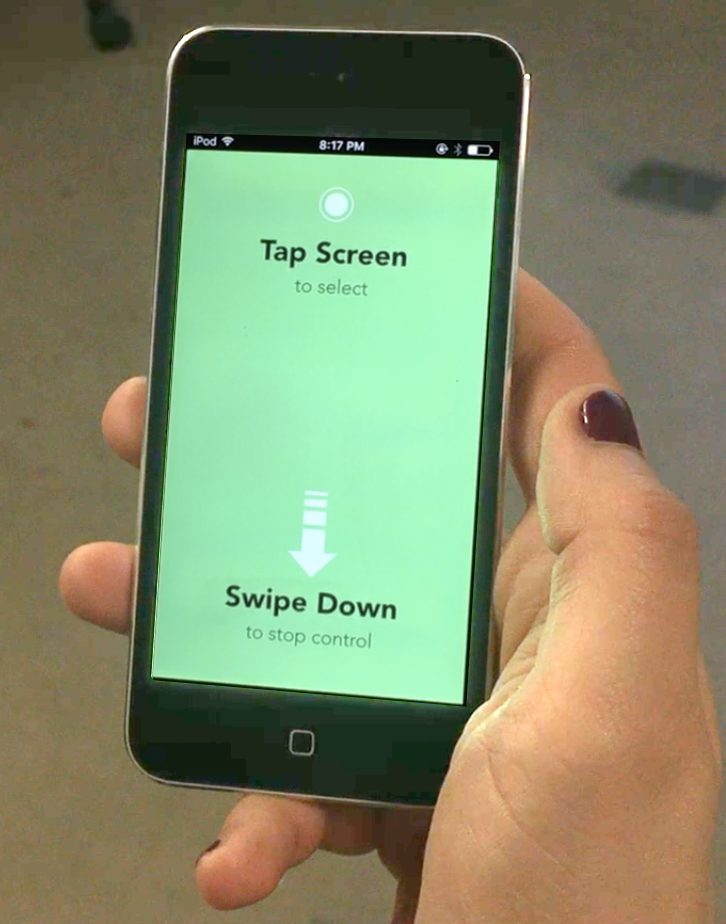

Active state of the TEG prototype

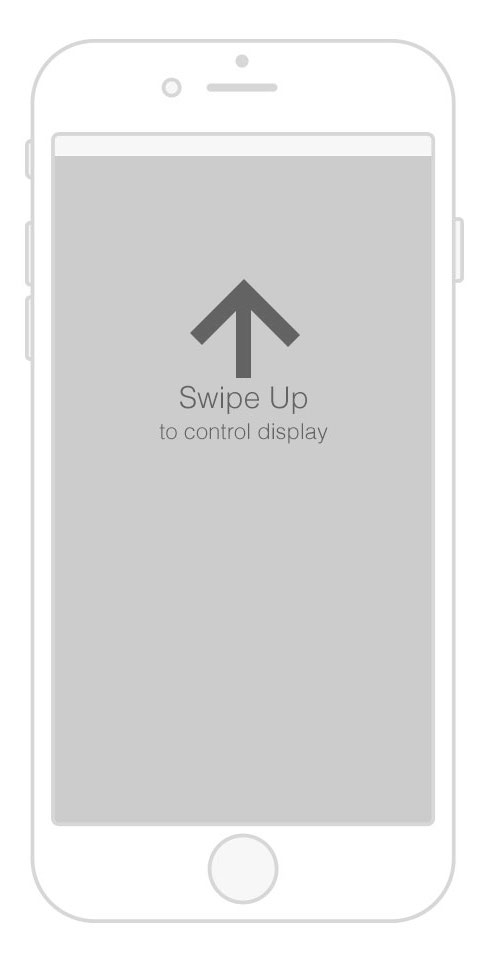

Inactive state of the TEG prototype

The user can hold the phone like a remote to control a standalone display. The prototype’s functionality currently focuses on the most salient controls: pointing and clicking. TEG could, however, be further developed to detect more sophisticated commands (e.g., distinguishing waving left from waving right), along with shape detection (e.g., drawing a circle).

The TEG prototype tracks the user’s movements and direction through the smartphone’s gyroscopic and acceleration data. To start or stop gesture tracking, the user executes the virtual clutch by swiping up or down on the smartphone’s touchscreen. For both TEG and the Myo, audio and visual feedback indicate that tracking has been toggled. For TEG, we chose the vertical swipe for the virtual clutch because a vertical swipe gives the user’s thumb more distance to travel. The longer threshold could reduce accidental activation of the virtual clutch.
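The swipe-based clutch described above can be sketched as two small pure functions: one that classifies a finished touch, and one that applies it to the tracking state. This is a minimal sketch, not the prototype's actual code. The threshold values, the function names, and the choice that swiping up starts tracking while swiping down stops it are all assumptions made for illustration.

```javascript
// Hypothetical threshold: minimum vertical travel (in CSS pixels) for a swipe
// to count as the virtual clutch. The case study does not state the real value.
const CLUTCH_MIN_DISTANCE_PX = 120;
// Hypothetical tolerance for a finger that barely moves during a tap.
const TAP_SLOP_PX = 10;

// Classify a finished touch as "swipe-up", "swipe-down", "tap", or "none".
// start and end are {x, y} points; y grows downward, as in browser touch events.
function classifyTouch(start, end, minDistance = CLUTCH_MIN_DISTANCE_PX) {
  const dx = end.x - start.x;
  const dy = end.y - start.y;

  if (Math.abs(dx) <= TAP_SLOP_PX && Math.abs(dy) <= TAP_SLOP_PX) {
    return "tap";
  }
  // Require a mostly vertical motion that covers the full threshold.
  if (Math.abs(dy) >= minDistance && Math.abs(dy) > Math.abs(dx)) {
    return dy < 0 ? "swipe-up" : "swipe-down";
  }
  return "none";
}

// Apply a classified touch to the tracking state.
// Assumption: swipe up starts tracking and swipe down stops it.
function nextState(state, gesture) {
  if (gesture === "swipe-up") return { tracking: true, clicks: state.clicks };
  if (gesture === "swipe-down") return { tracking: false, clicks: state.clicks };
  if (gesture === "tap" && state.tracking) {
    return { tracking: true, clicks: state.clicks + 1 };
  }
  return state; // taps while inactive and ambiguous touches are ignored
}
```

A short or diagonal swipe falls through to "none", which is the point of the long threshold: a stray thumb movement should not toggle tracking.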

Both screens are minimal in design, using simple color differences to allow easy glancing. Once active, the user can move the smartphone to control a cursor on a projected display. To minimize the need to look at the smartphone during use, in the active state, users can “click” with their cursor by tapping anywhere on the screen.
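One way to turn the phone's rotation into cursor movement is to integrate the rotation rate over each sensor sample and scale it to pixels. The sketch below assumes rotation rates in degrees per second, a hypothetical gain constant, and a sign convention in which positive yaw moves the cursor right and positive pitch moves it up. None of these details come from the prototype itself.

```javascript
// Display resolution used for the projected screens in the study.
const DISPLAY = { width: 1680, height: 1050 };
// Hypothetical gain: cursor pixels moved per degree of phone rotation.
const PIXELS_PER_DEGREE = 30;

function clamp(value, min, max) {
  return Math.min(max, Math.max(min, value));
}

// Advance the cursor by one sensor sample.
// rates: { yaw, pitch } in degrees/second; dtSeconds: time since last sample.
// When tracking is off (clutch disengaged), the cursor is left untouched.
function moveCursor(cursor, rates, dtSeconds, tracking) {
  if (!tracking) return cursor;
  const x = cursor.x + rates.yaw * dtSeconds * PIXELS_PER_DEGREE;
  const y = cursor.y - rates.pitch * dtSeconds * PIXELS_PER_DEGREE;
  return {
    x: clamp(x, 0, DISPLAY.width - 1),
    y: clamp(y, 0, DISPLAY.height - 1),
  };
}
```

Returning the cursor unchanged while tracking is off is what lets the user lower or realign their arm without moving the pointer.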

I intentionally designed the prototype’s interface to be comparable to a gesture-only control scheme: the Myo. Similar to our system, Myo tracks only one arm of the user, which limits the variables to design for, unlike full-body gesture control devices such as the Kinect. More importantly, Myo includes a gesture-based virtual clutch: when the user double-taps her thumb and middle finger, the device is toggled on or off. This gesture virtual clutch provides us with a benchmark to evaluate our TEG control scheme. Users can also make a fist to “click” within a system while using the Myo.

Evaluating Touch-Enhanced Gesture

A within-subject user study was designed to evaluate the TEG prototype in terms of accuracy and speed compared to the gesture-only Myo. Participants’ age range was limited to 18-29 in order to focus on people who were more likely to be savvy with technology, as well as more likely to have had exposure to touchscreen and/or gesture control. We recruited 30 participants (average age of 20.2, 63.3% male, 36.7% female). We first collected the participants’ backgrounds and previous usage of gesture control schemes. The study included two sets of tasks, each completed twice: once with TEG and once with the Myo. The order of the two control schemes was randomized for each participant. Before use, each participant was given a tutorial on each control scheme. During the tasks, the participants controlled a cursor on a 100” projected screen while standing 12 ft away. After the tasks were completed for each control scheme, I conducted an exit interview with the participant. I collected feedback using a questionnaire with a 7-point Likert scale and allowed for open-ended feedback.

In order to test our hypothesis that TEG would be faster and more accurate, I performed analysis of variance (ANOVA) of the data. I also analyzed qualitative data gathered from observations during testing and from the pre- and post-study questionnaires.
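For a within-subject design, one common form of this analysis is a one-way repeated-measures ANOVA, where each participant contributes one measurement per control scheme. The case study does not say which ANOVA variant was used, so the sketch below is an assumption, and the function name is hypothetical. It computes only the F statistic and its degrees of freedom; obtaining a p-value would additionally require the F distribution.

```javascript
// One-way repeated-measures ANOVA.
// data[i][j] = measurement for participant i under condition j
// (e.g. completion time with TEG in column 0 and with the Myo in column 1).
function repeatedMeasuresAnova(data) {
  const n = data.length; // participants
  const k = data[0].length; // conditions
  const mean = (xs) => xs.reduce((a, b) => a + b, 0) / xs.length;
  const sumSq = (xs, m) => xs.reduce((a, x) => a + (x - m) ** 2, 0);

  const grandMean = mean(data.flat());
  const conditionMeans = Array.from({ length: k }, (_, j) =>
    mean(data.map((row) => row[j]))
  );
  const subjectMeans = data.map(mean);

  const ssTotal = sumSq(data.flat(), grandMean);
  const ssConditions = n * sumSq(conditionMeans, grandMean);
  const ssSubjects = k * sumSq(subjectMeans, grandMean);
  // Removing between-subject variance is what makes the test within-subject.
  const ssError = ssTotal - ssConditions - ssSubjects;

  const dfConditions = k - 1;
  const dfError = (k - 1) * (n - 1);
  const f = ssConditions / dfConditions / (ssError / dfError);
  return { f, dfConditions, dfError };
}
```

With only two conditions, as in this study, the resulting F equals the square of the paired t statistic, so a paired t-test would lead to the same conclusion.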

Two tasks were designed to represent common actions done with gesture control. The tasks require participants to make selections, move continuously, and activate the virtual clutch to stop and start tracking.

First Tasks, Selection Task, demo

Task 1: Selection Tasks. The participant is asked to “click” each of the displayed targets in the same order. Once completed, the participant can take a break or proceed to the next screen, which has smaller targets. There are 5 different projected displays (1680x1050 resolution) with square targets of 100px, 80px, 60px, 40px, and 20px. The number of “clicks”, their coordinates, and the time to “click” all targets are recorded for each participant. To improve accuracy, I adapted Myo’s fist-based selection gesture into a tap on the screen for TEG, which reduces the impact of the selection gesture on the position of the cursor.
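The recorded click coordinates can be scored against the targets with a simple hit test. The sketch below assumes each target is stored as a top-left corner plus a side length, and it defines accuracy as hits divided by recognized clicks. Both the data shapes and that accuracy definition are assumptions for illustration, not the study's documented method.

```javascript
// True if a click at {x, y} lands inside a square target {x, y, size},
// where the target's x and y are its top-left corner in display pixels.
function isHit(click, target) {
  return (
    click.x >= target.x &&
    click.x < target.x + target.size &&
    click.y >= target.y &&
    click.y < target.y + target.size
  );
}

// Score one screen of the selection task.
// Targets must be hit in order, so each click is tested only against the
// next target that has not been hit yet.
function summarizeScreen(clicks, targets) {
  let nextTarget = 0;
  let hits = 0;
  for (const click of clicks) {
    if (nextTarget < targets.length && isHit(click, targets[nextTarget])) {
      hits += 1;
      nextTarget += 1;
    }
  }
  return {
    clicks: clicks.length,
    hits,
    accuracy: clicks.length === 0 ? 0 : hits / clicks.length,
    completed: nextTarget === targets.length,
  };
}
```

Because only recognized clicks are logged, this measure captures misses caused by cursor drift but not selection attempts the device never detected.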

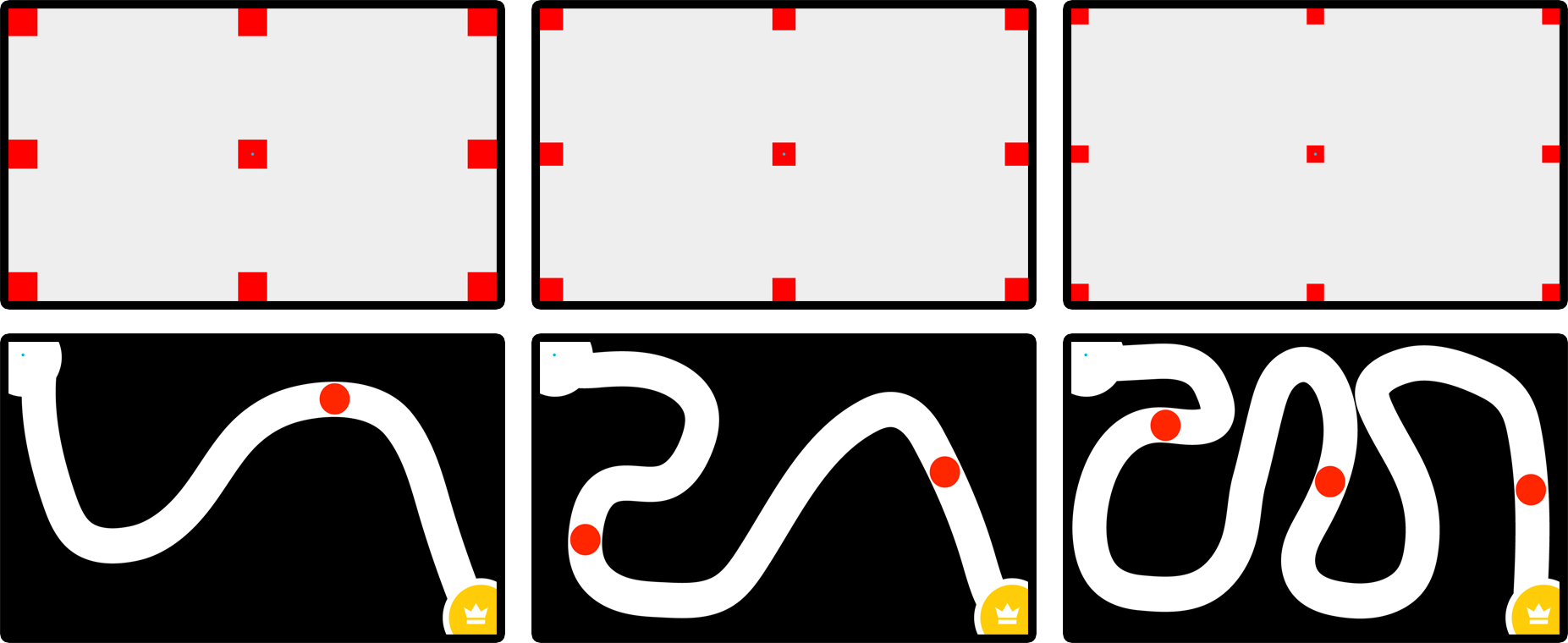

Top Row: Task 1 Selection Tasks; Bottom Row: Task 2 Movement Tasks

Task 2: Movement Tasks. Participants are asked to move a cursor on the display through three mazes of increasing difficulty. Participants cannot touch the sides of the maze or the maze will restart. Gesture control requires motion, and this can cause fatigue. I wanted to measure how the two control schemes’ virtual clutches perform in motion with a fatiguing user, since this is a common scenario where virtual clutches are used. Within each maze, there are red virtual clutch points. Maze 01 has one point, Maze 02 has two, and Maze 03 has three. The virtual clutch points are in the same locations for both devices. Once the participant moves to one of these points, she has to use the device’s virtual clutch to pause and start again. The number of attempts to use the virtual clutch command until it is detected by the control scheme is recorded. Participants can take a break at these points or realign themselves. For this task we record the total time to complete each maze, the number of “clicks”, the number of restarts caused by hitting the maze edge, and the participant’s path coordinates through the maze.
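The attempt counts recorded at each clutch point can be reduced to a single percent error. The sketch below assumes that every clutch use ends in exactly one detected command, so any attempt beyond the first counts as a failure, and that percent error is failed attempts over total attempts. The case study does not spell out its formula, so treat this definition and the function name as assumptions.

```javascript
// attemptsPerPoint[i] = number of clutch attempts a participant needed at
// clutch use i before the device detected the command (always >= 1).
function clutchPercentError(attemptsPerPoint) {
  const totalAttempts = attemptsPerPoint.reduce((sum, a) => sum + a, 0);
  if (totalAttempts === 0) return 0;
  // Each clutch use ends with exactly one detected attempt; the rest failed.
  const failedAttempts = totalAttempts - attemptsPerPoint.length;
  return (failedAttempts / totalAttempts) * 100;
}
```

For example, a participant who needed 1, 3, 2, 1, 1, and 4 attempts across six clutch uses made 12 attempts with 6 failures, which gives a 50% error under this definition.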

Key Takeaways

We saw that TEG’s virtual clutch was more accurate than the gesture-only control scheme’s. With a better virtual clutch, users can stop tracking to prevent the device from executing unintentional movements, which reduces false-positive errors caused by LMS.

Also, the TEG control scheme shows significant improvement in selecting targets of 100px, 40px, and 20px. Based on these results, we recommend that target sizes and hit states be >= 60px for an interface powered by TEG to achieve at least a 90% accuracy rating. For gesture-only control schemes, target sizes should be >= 100px.

When it came to executing commands, the TEG control scheme outperformed the gesture-only control scheme. Participants noted that, for both devices, executing a command resulted in unwanted cursor movement. However, the reported movement was larger for the gesture-only Myo, and we believe it caused more unsuccessful clicks.

The virtual clutch was not a focus of the selection tasks, which measured TEG’s performance in selecting targets. Combining gesture with touch improved the accuracy of executing commands within a system, such as selecting, and decreased the time needed to complete tasks. For the selection task, the number of “clicks” recorded included only those recognized by the devices; participants’ unrecognized attempts to click were not recorded. Both devices produced false-negative errors during testing, but the gesture-only Myo was clearly affected far more severely. The longer selection task times for the gesture-only control scheme were not caused by the speed of the hardware or software, but by the device’s failure to read the user’s intent due to a lack of hardware sensitivity. For many of the targets, participants needed to execute the selection command over and over again with the Myo.

It seems that by replacing gesture commands with touchscreen interaction, we improved recognition not only of the virtual clutch but of selection commands as well. Because of this, we saw a significant improvement in the speed of completing all the selection tasks with TEG. Even though there was little difference in satisfaction with speed between the two control schemes, this could be why 76.7% of participants responded that the TEG control scheme was easier to use than the gesture-only control scheme.