UX of VUIs with DiscoverCal

(Case Study)

DiscoverCal is a voice-controlled calendar display for a smart home or office setting. Through this application, we are exploring user experience design techniques for Voice User Interfaces (VUIs). Specifically, my team and I are designing and evaluating adaptive techniques that automatically customize a VUI to the user to improve learnability.

Skills Used

- Lean UX

- Usability Study

- Paper Prototype Testing

- Interviews

- Quantitative & Qualitative Data Analysis

- Markov Chain models

- Open & Axial Coding

- HTML5

- CSS3

- JS

- PHP

- Dialogflow API

- Webkit Speech API

- LocalStorage API

This is an ongoing study in collaboration with Anushay Furqan and Jichen Zhu. Our research has been published as two papers so far: Learnability through Adaptive Discovery Tools in Voice User Interfaces (Furqan 2017) and Patterns for How Users Overcome Obstacles in Voice User Interfaces (Myers 2018).

This case study is an overview of these two projects.

The Challenge

We believe that modern VUI conversation design does not account for individual differences among users. How people want to explore a VUI’s features, and how quickly, may differ. Such learning differences with new technology have been found in several areas of Human-Computer Interaction (HCI) research. As far back as the 1980s, software design research found that users’ approaches to learning a technology differed (Carroll and Carrithers 1984). One group was more reckless when learning the software, while the other group liked to play it safe and take it slower. But when the “play it safe” group did encounter an error, it was harder for them to overcome it. Similarly, a study teaching people to input text with an eye-tracker found that users picked up the technology at different speeds (Aoki 2008).

Whether it’s a person’s approach or speed, little is known about the individual differences of VUI users and how we can support them. The “play it safe” people out there may have different needs than their reckless counterparts. But currently we do not understand these differences, whether they exist, or how we could detect them. Our research studies how people learn an unfamiliar VUI and explores adaptive techniques to support that learning.

Adaptive techniques are automatic features of a system that customize it to the user based on collected data. You know how Google curates your search results based on your location and data? That’s a GUI adaptive technique.

Getting Started with Smaller Studies

Instead of jumping right into a long study, we decided to start smaller. Our first focus was to better understand VUI users. What are their pain points? How do they perceive adaptive techniques for GUIs in general? Our goals were to better understand how people approach VUIs in general and to develop a working VUI (DiscoverCal) to observe how people reacted to adaptive techniques.

Designing DiscoverCal

To research the UX of an unfamiliar VUI, we built our own VUI, DiscoverCal. We chose to create a calendar application because the calendar domain offers a good range of simple to complex tasks that we can observe people performing.

Getting to Know Our Audience

To start, we conducted a small usability study using an Amazon Echo simulator to better understand our users and their views on VUIs. Our study focused on young adults (roughly 18-35 years old). In this study, we observed people attempting tasks they had never done with a VUI, such as creating timers, editing to-do lists, and asking for the daily news. We found that their technical skill (how good they thought they were with computers) and their previous VUI experience (how often they use a VUI) influenced their approach. We followed up each usability test with an interview in which participants reflected on their experience with the VUI and described anything they enjoyed and any pain points.

We found varying approaches to figuring out how to structure commands to the Echo Simulator. Some users talked to it like it was a person, and others chose a more mechanical language. We found the more colloquial users did have more difficulty in getting the VUI to understand them. From this study, we also observed how much our users appreciated the ability to interrupt, or “barge-in,” when a VUI was providing feedback. Being able to instantly stop a VUI action/feedback when it was incorrect gave them more control and streamlined the process to correct the error.

Echosim.io Amazon Echo Simulator


Conversation Design

DiscoverCal’s conversation design was based on modern VUI conversation design. We did not want a VUI so unique that our results would be less applicable to other VUIs. To design DiscoverCal, we started with a survey of modern VUIs and a literature review of academic VUI research. We decided to build our own because, as VUIs grow in popularity, it is becoming harder to find people who have never used Alexa or Google Home. Having our own VUI allows us to observe people who have never used the system. It also allows us to completely customize the system. And since we are developing adaptive techniques, we need that flexibility.

DiscoverCal’s Adaptive Menu


We designed our first adaptive technique to support learning DiscoverCal’s features. Based on our initial usability study with the Echo Simulator, we designed a visual adaptive menu for DiscoverCal to support learning new commands. During that study, we heard people struggling with figuring out the commands for the more complex tasks we assigned them. After using a certain number of commands successfully with DiscoverCal, its menu adapts, hides the “learned” commands, and shows newer more complex ones. Our goal with this was to not overwhelm people with a huge menu of all DiscoverCal’s commands and to gradually expose them to new features.
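The menu rule described above can be sketched in JavaScript roughly as follows. This is a minimal illustration, not DiscoverCal’s actual code: the command list, the threshold of three successful uses, and the menu size of two are all assumptions, since the text does not state the real values.

```javascript
// Hypothetical command list, ordered from simple (level 1) to complex (level 2).
const COMMANDS = [
  { id: "view_day", phrase: "Show me today", level: 1 },
  { id: "add_event", phrase: "Add an event", level: 1 },
  { id: "edit_event", phrase: "Move my meeting to 3 pm", level: 2 },
  { id: "invite", phrase: "Invite Sam to my meeting", level: 2 },
];

// Assumed threshold: successful uses before a command counts as "learned".
const LEARNED_AFTER = 3;

function createAdaptiveMenu(commands, learnedAfter = LEARNED_AFTER, menuSize = 2) {
  const successes = new Map(); // command id -> number of successful uses

  const isLearned = (id) => (successes.get(id) || 0) >= learnedAfter;

  return {
    // Call this whenever the VUI successfully carries out a command.
    recordSuccess(id) {
      successes.set(id, (successes.get(id) || 0) + 1);
    },
    isLearned,
    // Visible menu: hide learned commands, then show the simplest remaining ones.
    visible() {
      return commands
        .filter((c) => !isLearned(c.id))
        .sort((a, b) => a.level - b.level)
        .slice(0, menuSize)
        .map((c) => c.phrase);
    },
  };
}
```

With these assumed values, a new user sees the two level 1 phrases. Once "view_day" has succeeded three times it drops out of the menu and the first level 2 phrase takes its place, which is the kind of swap that participants needed to have explained.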

Paper Prototyping and Iterative Design

Our first pass at DiscoverCal’s GUI and conversation design was done through paper prototyping. Developing a VUI is a laborious process, so this low-fidelity iteration allowed us to catch glaring issues in our design early. During this process, we also made tweaks to DiscoverCal to be tested in the next paper prototype. Users were instructed to use only their voice to control the prototype and to verbalize their thoughts during the study. The paper prototyping proctors had a sound board and a script to simulate DiscoverCal’s responses.

Paper Prototype Testing: Opening a pop-up and swapping the menu


We found that our adaptive menu was not transparent enough for our users. Even though the menu was swapped by hand in front of the user, the change was still occasionally missed. And even when it was noticed, users were not sure why the menu changed. We decided to address this in the developed version of DiscoverCal by using animation to direct attention to the menu as it swapped. We also added a quick message explaining the change. You can see these changes in the gif above.

Paper prototype testing also revealed issues in our GUI design. The menu’s iconography needed more work to show users that the menu contained commands they could say to DiscoverCal. We also found that commands in the menu were getting lost due to a lack of visual hierarchy, so we altered the colors and typographic treatment of the menu text to improve it. In later studies, we found that these changes did address the concerns uncovered in the paper prototype tests.

Actually Developing DiscoverCal

DiscoverCal is a web application built with HTML5, CSS3, JS and jQuery, and PHP. Automatic Speech Recognition (ASR) is handled by Chrome’s Web Speech API and Natural Language Processing (NLP) by Dialogflow. The calendar side of DiscoverCal is handled through Google Calendar’s API.
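The flow from speech to calendar action might be wired together roughly like the sketch below. `webkitSpeechRecognition` is Chrome’s prefixed constructor for the Web Speech API. Everything else is an assumption for illustration: `detectIntent` is a hypothetical function standing in for the Dialogflow request, and `handlers` is a hypothetical map of intent names to calendar actions. The constructor is passed in as a parameter so the function can be exercised outside a browser.

```javascript
// Sketch of the pipeline: ASR transcript -> NLP intent -> calendar action.
// Recognition: the speech recognition constructor (window.webkitSpeechRecognition in Chrome).
// detectIntent: hypothetical async function, text -> { intent, parameters }.
// handlers: hypothetical map of intent name -> function; must include `fallback`.
function startListening(Recognition, detectIntent, handlers) {
  const recognition = new Recognition();
  recognition.lang = "en-US";
  recognition.continuous = true; // keep listening across utterances
  recognition.interimResults = false; // only act on final transcripts

  recognition.onresult = async (event) => {
    // Only look at results that are new since the last event.
    for (let i = event.resultIndex; i < event.results.length; i++) {
      const result = event.results[i];
      if (!result.isFinal) continue;
      const transcript = result[0].transcript.trim();
      const { intent, parameters } = await detectIntent(transcript);
      // Unrecognized intents go to the fallback handler (e.g. a re-prompt).
      const handler = handlers[intent] || handlers.fallback;
      handler(parameters, transcript);
    }
  };

  recognition.start();
  return recognition;
}
```

In Chrome this would be called as `startListening(window.webkitSpeechRecognition, detectIntent, handlers)`. Keeping recognition, intent detection, and calendar actions as separate pieces also makes it easier to log where an obstacle originated, for example an NLP error versus a system error.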

Evaluating DiscoverCal’s UX and Adaptive Techniques

After our initial modern VUI usability study and paper prototype tests, we created a working prototype of DiscoverCal. With this prototype, we designed a user study to evaluate the system’s usability. We wanted to look beyond initial use of the system, so each participant used DiscoverCal for three sessions over the course of one week. During each session, participants were given tasks to complete with DiscoverCal. Participants were asked to think aloud through the session and each session was ended with an interview reflecting on that day’s events.

Adaptive Techniques Results

Unfortunately, based on the usage and interview data from our 24 participants, we found that the adaptive menu still lacked transparency despite our changes from the paper prototyping sessions. With the menu animation added, most participants did notice the change, but why it changed was still unclear. The rules behind the adaptive technique, and when a command was marked as “learned,” were not obvious to our participants. The text that appeared when the menu changed, explaining the reason for the change, was not enough: participants either did not read it or missed it entirely. However, this study is just one in a bigger project. Based on its results, we are now designing new adaptive techniques that focus on transparency. Instead of relying on a text pop-up, we are exploring audio and spoken feedback to tell the user why these changes are occurring.

As for individual differences, in our interviews we heard varying opinions on the adaptive menu. Some liked the idea of having more commands surfaced this way, some wanted more control over when the commands changed, and others thought the changes happened too quickly or too slowly. These interviews strengthened our belief that the individual differences of VUI users are underexplored. There is potential to support these differences in VUI design.

UX Results

As mentioned, we are very interested in how people learn unfamiliar designs. After conducting our formal user study, we transcribed the usability sessions and interviews of each participant. We revisited the data and focused on categorizing the obstacles users faced and the tactics they employed to overcome them. We hope that by understanding our users’ strategies better, we can support them when they face an obstacle. Each transcript was coded, a final list of obstacles and tactics was created, and the transcripts were recoded with this list.

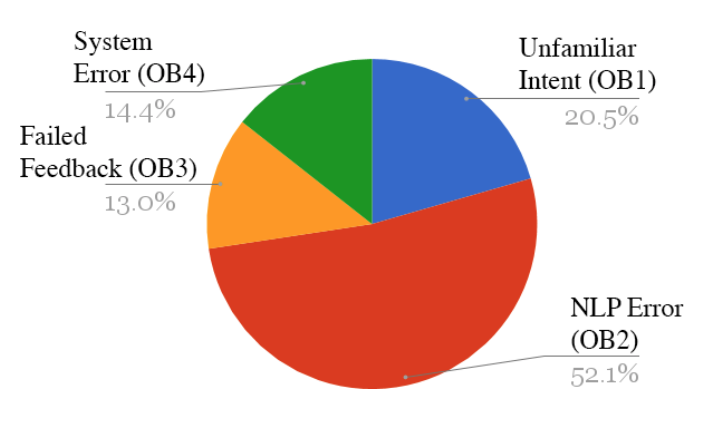

Obstacles

- Unfamiliar Intent - Participant is not fully aware of what the VUI system can do and/or how to structure verbal commands.

- NLP Error - NLP Error obstacles occur when the system “mishears” the participant and/or maps their phrase to the wrong command. This is an error in the ASR or NLP pipeline.

- Failed Feedback - Participants ignored or misinterpreted audio or visual feedback, causing errors. This is a weakness in the VUI’s design that needs to be addressed.

- System Error - A flaw/bug in the VUI system’s architecture outside of ASR and NLP that disrupts the user’s experience.

Figure 1. % of Total Obstacles Recorded (146)


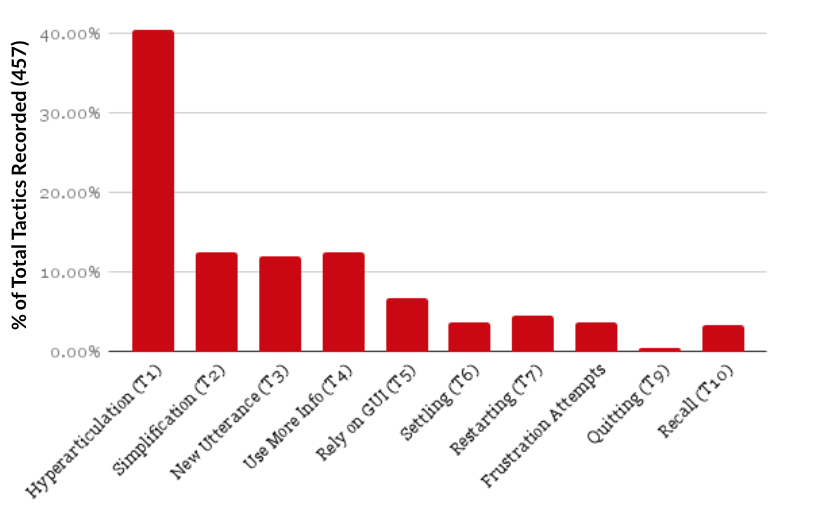

Figure 2. Distribution of total tactics recorded across all participants


Tactics

1. Hyperarticulation

2. Simplification

3. New Utterance

4. Use More Info

5. Rely on GUI

6. Settling

7. Restarting

8. Frustration Attempts

9. Quitting

10. Recall

If you want to read more about each tactic, please feel free to read our paper about this study.

Based on these categories, we found that tactics 2-4 were exploration tactics. These were varying forms of participants “guessing” what DiscoverCal could do and how to say it. Tactics 6-9 were heavily coupled with frustration and confusion. These were “fallback” tactics that our participants used as a last resort rather than as their initial tactic. By looking at the obstacles and the tactics used to overcome them, we found:

#1 While NLP Error obstacles are the most common, the other obstacles still cause frustration and confusion for our participants. As mentioned, tactics 6-9 were observed to be coupled with frustration and confusion. These tactics make up a larger percentage of the total used for Unfamiliar Intent and System Error obstacles. There is a sentiment in VUI design that all we need is better NLP and ASR, and that once machines can hear us perfectly, there will be no issues. But even if we remove all the obstacles caused by NLP errors, we are still left with obstacles that are detrimental to a user’s experience.

#2 We also observed our participants taking an exploratory approach to overcoming obstacles by using the exploration tactics (T2-4). This pattern does not currently align with how we support people in learning a new VUI. Right now, Siri and Alexa ask users to memorize a list or open their companion app. We need future research on supporting this exploration pattern instead of forcing people to do it our way.
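The comparison behind finding #1 can be illustrated with a small tally: for each obstacle type, what share of the tactics used were fallback tactics (Settling, Restarting, Frustration Attempts, Quitting)? The sketch below assumes coded transcripts are available as obstacle/tactic pairs. The sample records are made-up placeholders, not data from the study, and the function name is hypothetical.

```javascript
// The four "fallback" tactics (tactics 6-9 in the list above).
const FALLBACK_TACTICS = new Set([
  "Settling",
  "Restarting",
  "Frustration Attempts",
  "Quitting",
]);

// records: array of { obstacle, tactic } pairs taken from coded transcripts.
// Returns, per obstacle type, the fraction of tactics that were fallback tactics.
function fallbackShareByObstacle(records) {
  const totals = {};
  for (const { obstacle, tactic } of records) {
    if (!totals[obstacle]) totals[obstacle] = { all: 0, fallback: 0 };
    totals[obstacle].all += 1;
    if (FALLBACK_TACTICS.has(tactic)) totals[obstacle].fallback += 1;
  }
  const shares = {};
  for (const [obstacle, t] of Object.entries(totals)) {
    shares[obstacle] = t.fallback / t.all;
  }
  return shares;
}

// Placeholder records for illustration only (not study data).
const sampleRecords = [
  { obstacle: "NLP Error", tactic: "Hyperarticulation" },
  { obstacle: "NLP Error", tactic: "New Utterance" },
  { obstacle: "NLP Error", tactic: "Quitting" },
  { obstacle: "NLP Error", tactic: "Simplification" },
  { obstacle: "System Error", tactic: "Restarting" },
  { obstacle: "System Error", tactic: "Settling" },
];

console.log(fallbackShareByObstacle(sampleRecords));
```

A higher share for an obstacle type suggests that participants had fewer productive ways to recover from it, which is the pattern reported for Unfamiliar Intent and System Error obstacles.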

Key Takeaways

So far, we have conducted a usability study on modern VUIs, paper prototype testing, and a formal evaluation of a working DiscoverCal prototype. We are still working with DiscoverCal to uncover individual learning differences of VUIs and how to support them. Based on our studies we present the following:

#1 Consider the individual - This is our main focus, but we see no reason other VUI designers cannot think more about individual preferences right now. VUI users are vastly different. NLP struggles to understand all the different ways a person can phrase one statement. But there are more differences than how we speak. How we problem-solve and how we prefer machines to speak to us may differ too. When designing a VUI, consider how the conversation you are creating would appeal to different personalities. Do you support both the “reckless” and the “play it safe” users? Can you provide both with safety nets as they explore your VUI? Is your feedback design too explicit and lengthy to keep the attention of those who blaze through your VUI and skip the tutorials? Our research found that people exhibit exploratory patterns when learning a VUI. How can we support this pattern? And is one pattern the same for all users? Our research will keep pushing towards uncovering ways we can support these different VUI users.

#2 Transparency is important in adaptive design - The evaluation of our adaptive technique found that users were confused by it. Users were not sure why DiscoverCal’s menu changed. Making it clear why the system is adapting, and what the user’s role is, can help users build a more accurate mental model of the system. Our design included a text popup that explained the change to our users, but this popup was ignored or missed. This solution is seen frequently in adaptive GUIs. For example, in the image below, my YouTube Dashboard has adapted the content on the page based on my video history. It also says, “Recommended channel for you” to make it more transparent why that channel is on my YouTube dashboard.

My Personal YouTube Dashboard


But in our VUI/GUI system, this approach was not well received. We believe a non-text solution should be explored. If DiscoverCal had explicitly stated in speech that it was changing its menu, perhaps the change would not have been as easily missed. Our point is not that speech feedback is the answer, but that the text popup (which works for GUIs) is not. More research is needed on designing for transparency in adaptive techniques for VUIs.

Next Steps

Our main focus is still supporting individual differences in learning a VUI. Our next study will attempt to identify these differences by analyzing user characteristics and performance metrics. We hope to design an adaptive version of DiscoverCal that can detect these differences based on usage data and tailor the system to the user.

References

- H. Aoki, J. P. Hansen, and K. Itoh, “Learning to interact with a computer by gaze,” Behav. Inf. Technol., vol. 27, no. 4, pp. 339–344, 2008.

- J. M. Carroll and C. Carrithers, “Training wheels in a user interface,” Commun. ACM, vol. 27, no. 8, pp. 800–806, 1984.

- A. Furqan, C. Myers, and J. Zhu, “Learnability through Adaptive Discovery Tools in Voice User Interfaces,” Proc. 2017 CHI Conf. Ext. Abstr. Hum. Factors Comput. Syst. - CHI EA ’17, pp. 1617–1623, 2017.

- C. Myers, A. Furqan, J. Nebolsky, K. Caro, and J. Zhu, “Patterns for How Users Overcome Obstacles in Voice User Interfaces,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, 2018, p. 6:1–6:7.